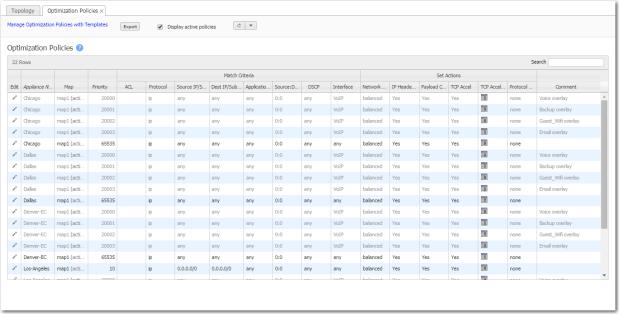

The Optimization Policies report displays a polled, read-only view of the Optimization policy entries that exist on the appliance(s). This includes the appliance-based defaults, entries applied manually (via the WebUI or CLI), and entries that result from applying an Orchestrator Optimization Policy template.

Use the Templates tab to create and manage Optimization policies.

- Network Memory addresses limited bandwidth. This technology uses advanced fingerprinting algorithms to examine all incoming and outgoing WAN traffic. Network Memory localizes information and transmits only modifications between locations.
  - Maximize Reduction optimizes for maximum data reduction at the potential cost of slightly lower throughput and/or some increase in latency. It is appropriate for bulk data transfers such as file transfers and FTP, where bandwidth savings are the primary concern.
  - Minimize Latency ensures that Network Memory processing adds no latency. This may come at the cost of lower data reduction. It is appropriate for extremely latency-sensitive interactive or transactional traffic. It is also appropriate when the primary objective is to fully utilize the WAN pipe to increase LAN-side throughput, rather than to conserve WAN bandwidth.
  - Balanced is the default setting. It dynamically balances latency and data reduction objectives and is the best choice for most traffic types.
  - Disabled turns off Network Memory.
- IP Header Compression is the process of compressing excess protocol headers before transmitting them on a link and decompressing them to their original state at the other end. Protocol headers can be compressed because of the redundancy in header fields within the same packet, as well as across consecutive packets of a packet stream.
- Payload Compression uses algorithms to identify relatively short byte sequences that are repeated frequently. These are then replaced with shorter segments of code to reduce the size of transmitted data. Simple algorithms can find repeated bytes within a single packet; more sophisticated algorithms can find duplication across packets and even across flows.
- TCP Acceleration uses techniques such as selective acknowledgements, window scaling, and maximum segment size (MSS) adjustment to mitigate poor performance on high-latency links.
- Protocol Acceleration provides explicit configuration for optimizing CIFS, SSL, SRDF, Citrix, and iSCSI protocols. In a network environment, it's possible that not every appliance has the same optimization configurations enabled. Therefore, the site that initiates the flow (the client) determines the state of the protocol-specific optimization.
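The Payload Compression description above (frequently repeated byte sequences replaced by shorter codes) can be illustrated with a minimal sketch using Python's standard `zlib` module. DEFLATE is used here only as a familiar stand-in; it is an assumption for illustration, not a statement about the algorithm the appliance uses. The helper name `compression_ratio` and the sample payload are hypothetical.

```python
import zlib


def compression_ratio(payload: bytes) -> float:
    """Return compressed size divided by original size (lower means more reduction)."""
    if not payload:
        raise ValueError("payload must not be empty")
    # Level 6 is zlib's usual speed/size trade-off.
    compressed = zlib.compress(payload, 6)
    return len(compressed) / len(payload)


# Hypothetical payload: one short byte sequence repeated many times.
repetitive = b"GET /index.html HTTP/1.1\r\n" * 200

# Compression must be lossless: the round trip restores the original bytes.
restored = zlib.decompress(zlib.compress(repetitive, 6))
assert restored == repetitive

print(f"compressed/original size ratio: {compression_ratio(repetitive):.3f}")
```

Highly repetitive data like this shrinks to a small fraction of its original size, while already-compressed or encrypted payloads typically show little or no reduction.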

- If you are using Orchestrator templates to add route map entries, Orchestrator will delete all entries from 1000 – 9999, inclusive, before applying its policies.
- You can create rules from 1 – 999, which have higher priority than Orchestrator template rules.
- Similarly, you can create rules from 10000 – 65534, which have lower priority than Orchestrator template rules.
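The priority ranges above can be summarized in a short sketch. The numeric boundaries come from the text; the function name `classify_priority`, the returned labels, and the assumption that 1 and 65534 are the overall limits for user-created rules are illustrative only.

```python
# Range that Orchestrator clears before applying a template (1000-9999 inclusive).
ORCHESTRATOR_RANGE = range(1000, 10000)

# Assumed overall bounds, taken from the lowest and highest values mentioned above.
MIN_PRIORITY = 1
MAX_PRIORITY = 65534


def classify_priority(priority: int) -> str:
    """Return a hypothetical label describing where a rule priority falls."""
    if not MIN_PRIORITY <= priority <= MAX_PRIORITY:
        raise ValueError(f"priority {priority} is outside {MIN_PRIORITY}-{MAX_PRIORITY}")
    if priority < ORCHESTRATOR_RANGE.start:
        # 1-999: evaluated before template rules, so higher priority.
        return "manual-high"
    if priority in ORCHESTRATOR_RANGE:
        # 1000-9999: deleted and rewritten whenever a template is applied.
        return "orchestrator-template"
    # 10000-65534: evaluated after template rules, so lower priority.
    return "manual-low"


def survives_template_apply(priority: int) -> bool:
    """True if a manually created rule at this priority is outside the cleared range."""
    return classify_priority(priority) != "orchestrator-template"


for p in (10, 999, 1000, 9999, 10000, 65534):
    print(p, classify_priority(p), survives_template_apply(p))
```

A check like this can be useful before creating rules manually, since an entry placed in the 1000 – 9999 range would be removed the next time a template is applied.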

- To allow any IP address, use 0.0.0.0/0 (IPv4) or ::/0 (IPv6).
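To see why these two prefixes act as wildcards, the following sketch uses Python's standard `ipaddress` module to test membership. It only demonstrates general CIDR semantics; how the appliance itself evaluates match criteria is not described here, and the helper name `matches` and the sample addresses (taken from documentation-reserved ranges) are hypothetical.

```python
import ipaddress


def matches(address: str, network: str) -> bool:
    """Return True if `address` falls inside the CIDR block `network`."""
    addr = ipaddress.ip_address(address)
    net = ipaddress.ip_network(network)
    # An IPv4 address never matches an IPv6 prefix, and vice versa.
    return addr.version == net.version and addr in net


# A zero-length prefix fixes no bits, so every address of that family matches.
print(matches("192.0.2.10", "0.0.0.0/0"))   # IPv4 wildcard
print(matches("2001:db8::1", "::/0"))       # IPv6 wildcard

# The wildcards are per address family, so both are needed to cover all traffic.
print(matches("192.0.2.10", "::/0"))
```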